Valify - my robot lawnmower project

- Author: raess

- Created on

Looks good! The active depth sensor will not work outdoors (outdoor test: https://www.youtube.com/watch?v=XTL8Xb6rS3U). However, the integrated stereo depth estimation (based on triangulation) will still deliver point clouds outside, but they will be noisier and not very precise at long range (that is a general problem of triangulation with a small camera baseline, not of the sensor itself). The outdoor point cloud will be good enough for obstacle detection, and I'm sure you will use the LiDAR for localization and mapping.

(It's really a pity they are no longer selling this LiDAR...)
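The point about small baselines can be made concrete with the standard pinhole stereo relation Z = f·B/d: a fixed disparity error translates into a depth error that grows with the square of the distance. The following is a minimal sketch only. The baseline (70 mm), focal length (600 px) and disparity error (0.1 px) are illustrative assumptions, not measured values for the ZR300 or R200.

```python
def disparity_px(z_m, baseline_m, focal_px):
    """Disparity in pixels for a point at depth z_m (pinhole stereo: d = f * B / Z)."""
    return focal_px * baseline_m / z_m


def depth_error(z_m, baseline_m, focal_px, disparity_err_px=0.1):
    """First-order depth error: dZ ~= Z^2 / (f * B) * dd."""
    return (z_m ** 2) / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    BASELINE_M = 0.07   # assumed baseline, for illustration only
    FOCAL_PX = 600.0    # assumed focal length in pixels
    for z in (1.0, 2.0, 5.0, 10.0):
        err = depth_error(z, BASELINE_M, FOCAL_PX)
        print("depth %5.1f m -> approx. error %.3f m" % (z, err))
```

Doubling the distance quadruples the error, while doubling the baseline halves it. That is why a short-baseline camera can be fine for nearby obstacle detection yet too noisy for mapping distant structure.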

Thanks! Are you sure? The ZR300 has the same IR components as the R200 camera, which is used in the Vision Kit for Intel's "Ready To Fly" outdoor drone.

Here is a video of outdoor usage with an R200: https://www.youtube.com/watch?v=zcYc2oIfQng

And this would be my goal:

https://www.youtube.com/watch?v=qpTS7kg9J3A

Yes, as I said: indoors it uses the active IR, outdoors I'm fairly sure you will need to use the stereo sensing capabilities (I think it switches transparently and you will probably not notice this at first in your point clouds; however, the measurements will become less precise the further away they are...).

From the specification:

Indoor and Outdoor performance

- >3.5m indoor range and longer range outdoors
- Uses IR projector for indoor usages and ambient IR from sunlight for outdoors
- IR pattern provides texture to non-textured objects
- No multicamera interference. Supports multi-camera configuration

I'm looking forward to your first outdoor tests. By the way, if you go with appearance-based correlation for relocalization (as used in RTAB-Map and many others), it will not be very robust (or will not work at all) once the light conditions have changed (morning -> afternoon). Relocalization is needed when the robot has to find its position again after the mapping session. If the light has changed between mapping (learning) and relocalization, appearance-based correlation will fail. A solution may be geometry-based correlation for relocalization, i.e. using depth information (from LiDAR or a point cloud).
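To illustrate what geometry-based relocalization means, here is a minimal sketch of a brute-force correlative scan match on a tiny 2D occupancy grid: each candidate pose is scored by how many range endpoints land in occupied map cells, so the result depends only on geometry and not on lighting. This is not how RTAB-Map or HectorSLAM are implemented. All function names, the grid layout and the `simulate_scan` helper (which ignores occlusion) are hypothetical and exist only for this example.

```python
import math


def score_pose(occupied, scan, x, y, theta, cell=0.5):
    """Count scan endpoints that fall into occupied map cells for one candidate pose."""
    hits = 0
    for bearing, range_m in scan:
        wx = x + range_m * math.cos(theta + bearing)
        wy = y + range_m * math.sin(theta + bearing)
        if (math.floor(wx / cell), math.floor(wy / cell)) in occupied:
            hits += 1
    return hits


def relocalize(occupied, scan, candidates, cell=0.5):
    """Return the candidate pose (x, y, theta) with the highest geometric score."""
    return max(candidates, key=lambda p: score_pose(occupied, scan, p[0], p[1], p[2], cell))


def simulate_scan(occupied, pose, cell=0.5):
    """Demo helper: one (bearing, range) reading per occupied cell centre, no occlusion."""
    x, y, theta = pose
    scan = []
    for i, j in sorted(occupied):
        dx = (i + 0.5) * cell - x
        dy = (j + 0.5) * cell - y
        scan.append((math.atan2(dy, dx) - theta, math.hypot(dx, dy)))
    return scan


if __name__ == "__main__":
    # Asymmetric "map": a long wall, a shorter wall and a single post.
    grid = {(i, 8) for i in range(10)} | {(9, j) for j in range(3, 8)} | {(2, 5)}
    true_pose = (2.0, 1.5, math.pi / 2)
    scan = simulate_scan(grid, true_pose)
    poses = [(0.5 * i, 0.5 * j, k * math.pi / 4)
             for i in range(10) for j in range(10) for k in range(8)]
    print(relocalize(grid, scan, poses))
```

An exhaustive search like this only scales to small maps. Real packages use multi-resolution grids, gradient-based refinement or particle filters, but the scoring idea is the same.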

I think I will only be able to test the camera this weekend.

It is still a long way to the first "real" outdoor test. I am starting with CNC milling the motor brackets on Friday. Then comes assembly of all parts, modifications etc.

My estimated time frame until the robot can be driven with very basic functionality is 2-3 weeks, and that is with a DS4 joystick.

Then a longer journey begins with the rest of the functionality and ROS.

Thanks for the advice. I think a lot of testing will be needed to get this working smoothly. I will also use Reach RTK in combination with the LiDAR and camera.

Please note that this is my first robot build, so I am learning by doing.

I have been playing with (in order of expertise) Google Tango, 360 degree LiDAR, ROS, HectorSLAM, RTAB-Map, Piksi Multi RTK and Realsense R200 for a while now, so I can try to help you get the software parts to work ;-) If you want quick results (and you have a nearly flat lawn surface), I would go with the 360 degree LiDAR and ROS HectorSLAM (that was my 360 degree LiDAR approach: http://grauonline.de/wordpress/?page_id=1233). For non-flat lawn surfaces (like mine), the complexity begins. I tried the Tango and RTAB-Map and discovered the problems with changing light conditions, so I think I will try the ROS packages Octomap, humanoid_localization, ... Ideally one would use only the Realsense (so others can rebuild this solution), but it is probably not precise enough for robust outdoor localization and mapping based on geometric correlation (at least in a quick simulation the Octomap works: http://ros-developer.com/2017/05/02/making-occupancy-grid-map-in-ros-from-gazebo-with-octomap )

Good to know. I always appreciate help, and I am sure I will need it ahead. Quick results are desirable from the start so I can test how everything works together. What do you mean by a nearly flat lawn surface (no pits and bumps)? My lawn is quite flat, but it also has 3 level differences.

Here is my lawn (3D mapping with my DJI drone): https://cdn.hackaday.io/images/5337021523507289270.jpg

I have played around a bit with the Reach RTK just for testing and tried to get GPS coordinates into ROS using this nmea_tcp_driver (https://github.com/CearLab/nmea_tcp_driver), and it seems to work. Do you know any other ROS package that supports the Reach RTK?
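For reference, the data arriving over such an NMEA stream is plain text. Below is a minimal sketch of verifying the checksum of a GGA sentence and converting it to decimal degrees, independent of ROS and of the driver linked above. The function names are hypothetical, the sample sentence is made up for illustration, and sentences with empty position fields (no fix yet) are deliberately not handled.

```python
from functools import reduce


def nmea_checksum(body):
    """XOR of all characters between '$' and '*', as two uppercase hex digits."""
    return "{:02X}".format(reduce(lambda acc, ch: acc ^ ord(ch), body, 0))


def _to_degrees(value, hemisphere):
    """Convert NMEA (d)ddmm.mmmm notation to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence):
    """Parse one GGA sentence into a dict; raises ValueError on malformed input."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        raise ValueError("not an NMEA sentence")
    body, checksum = sentence[1:].split("*", 1)
    if nmea_checksum(body) != checksum.upper():
        raise ValueError("checksum mismatch")
    fields = body.split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    return {
        "lat": _to_degrees(fields[2], fields[3]),
        "lon": _to_degrees(fields[4], fields[5]),
        "fix_quality": int(fields[6]),  # 4 = RTK fixed, 5 = RTK float
        "satellites": int(fields[7]),
        "altitude_m": float(fields[9]),
    }


if __name__ == "__main__":
    # Made-up sample; the checksum is computed here rather than hard-coded.
    body = "GNGGA,123519,4807.038,N,01131.000,E,4,08,0.9,545.4,M,46.9,M,,"
    print(parse_gga("$" + body + "*" + nmea_checksum(body)))
```

The `fix_quality` field is worth checking before trusting a position: only an RTK fixed solution (value 4) gives the centimetre-level accuracy that makes RTK interesting for a mower.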

I also tried the ZR300 with RTAB-Map indoors a while ago, but had a hard time getting reliable results with the IMU from the ZR300 (I think), so it lost its position in the 3D map and had to find the correct location again with the camera. From my understanding, one also needs surfaces that are not completely planar and uniformly colored; some type of texture is desirable.

Your lawn looks fine for doing just 2D correlation with a 360 degree LiDAR (e.g. with HectorSLAM). My lawn, however, does not.

ReachRTK: maybe this one: https://github.com/enwaytech/reach_rs_ros_driver

For 3D geometry correlation, the surfaces should not be completely planar. Also, unlike the LiDAR, the Realsense camera only sees a section of the world, so it can quickly lose its position and will need additional global localization techniques that (for outdoor use) also work with changing light conditions (e.g. a particle filter as in humanoid_localization: http://wiki.ros.org/humanoid_localization). Attachment: https://forum.ardumower.de/data/media/kunena/attachments/905/ardumower_on_the_hills.jpg/
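As a rough idea of how a particle filter performs global localization from geometry alone, here is a minimal 1D sketch: the robot drives along a line, measures only the terrain height under it, and a cloud of position hypotheses is repeatedly moved, weighted and resampled. This is not the humanoid_localization implementation. The `terrain_height` map, the noise values and all names are hypothetical assumptions for illustration.

```python
import math
import random


def terrain_height(x):
    """Hypothetical known height map (metres) along a line across the lawn."""
    return 0.02 * x + 0.3 * math.sin(x / 5.0)


def localize(true_start, steps, n_particles=1000, step_len=1.0,
             motion_sigma=0.2, sensor_sigma=0.1, length=100.0, seed=0):
    """Run a basic particle filter; returns (true position, estimated position)."""
    rng = random.Random(seed)
    # Global localization: start with no idea where the robot is.
    particles = [rng.uniform(0.0, length) for _ in range(n_particles)]
    true_x = true_start
    for _ in range(steps):
        # The robot moves and takes one noisy height measurement.
        true_x += step_len
        z = terrain_height(true_x) + rng.gauss(0.0, sensor_sigma)
        # Predict: apply the same motion to every particle, plus motion noise.
        particles = [p + step_len + rng.gauss(0.0, motion_sigma) for p in particles]
        # Update: weight each particle by how well the map explains the measurement.
        weights = [math.exp(-0.5 * ((z - terrain_height(p)) / sensor_sigma) ** 2)
                   for p in particles]
        if sum(weights) == 0.0:
            weights = [1.0] * n_particles  # fall back to uniform if all weights underflow
        # Resample: draw a new particle set in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return true_x, sum(particles) / n_particles


if __name__ == "__main__":
    truth, estimate = localize(true_start=20.0, steps=40)
    print("true position %.2f, estimate %.2f" % (truth, estimate))
```

A single height reading is ambiguous here because many positions share the same height, but the sequence of readings combined with the known motion is not. The same reasoning applies in 2D or 3D with LiDAR or depth data as the measurement.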

Thanks. Let's talk about this more later on. The main focus now is to get it up and running, and thinking and doing too much at the same time is not always good. But you certainly gave me some pointers on where to start, and I will let you know as soon as I start on this task.

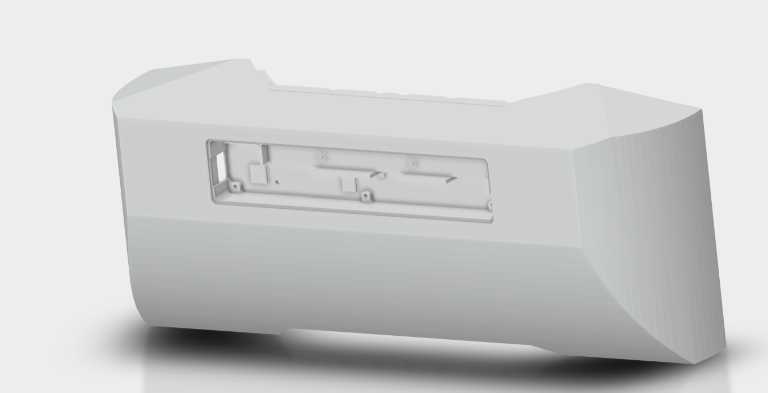

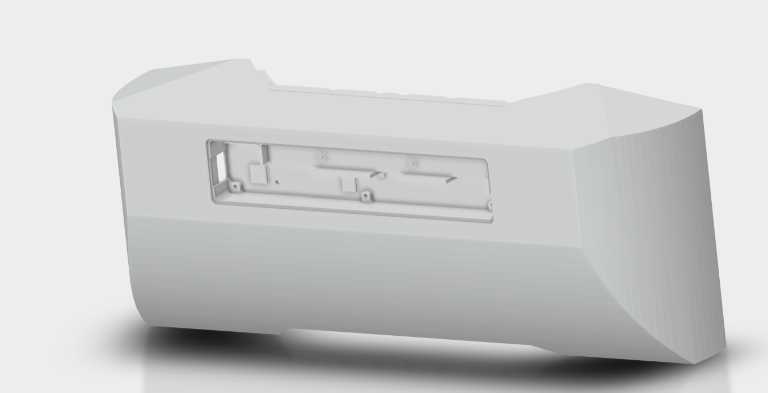

After the front nose test yesterday I have decided to redesign the nose. I would like the nose to follow the flow of the rest of the body. I also realized that I need to mount the camera and the PCB from the outside in and not from the inside out (giving it dust protection and cover). But this would also mean I will be close to the heat distortion temperature of the PETG (which is around 75 °C) when the camera has been running for a while. Maybe a small fan could help with cooling the ZR300. I will think about it today and post an update when I have a new design.

That's a good plan. By the way, it can make sense to experiment indoors first to find out whether a ROS SLAM package is also suitable for outdoor use: perform the mapping (learning) task indoors in a room lit by daylight (sun). Save your map. Then block out the daylight and switch on artificial light in this room. Restart the localization within your saved map. If it can still localize your position within the saved map, you have found a robust outdoor SLAM that can deal with changing light conditions.

Today I finished with the design of the new nose.

Before printing the new nose I decided to print only the housing for the ZR300 camera. The partial print came out great. The main modification from the original housing is that it is made deeper, so the PCB can be mounted further in, allowing the USB plug to be inserted into the USB socket (not possible before). The front cover fits like a glove and will be used on the nose.

Let's see if I should start the print tonight.

Attachment: https://forum.ardumower.de/data/media/kunena/attachments/4372/newdesignnose.png/

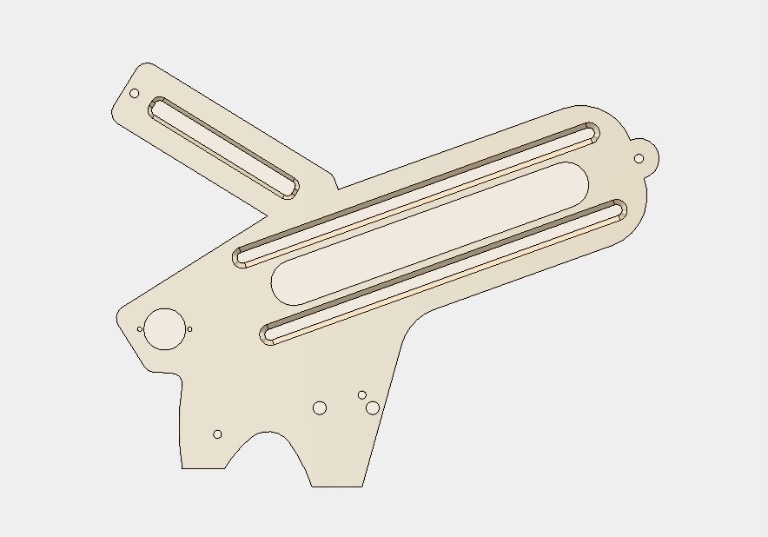

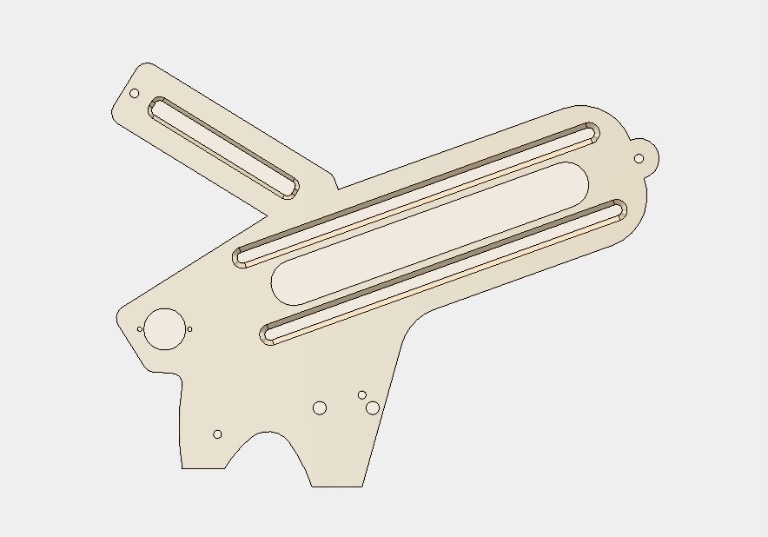

So yesterday, CNC milling of motor brackets was started.

The inside contour was finished. The outside contour is left.

Attachment: https://forum.ardumower.de/data/media/kunena/attachments/4372/mb.jpg/

@nero76, I played around with RTAB-Map this weekend, followed your advice and dimmed the light. The odometry quality dropped and I was not able to localize at all using RTAB-Map. Hmm. If I were to use a particle filter, how would this be set up? For example, the one you mention above.

I was looking at maplab. Have you tried it? https://github.com/ethz-asl/maplab

Finally, do you know any other package that supports the Realsense camera?

Many thanks!
