Imagine you could teach your robot what lawn looks like from a handful of example images (positive examples), plus a handful of images showing what lawn does not look like (negative examples). You then add a USB camera to the robot, capture a live video stream, and in each video frame take a rectangular area (a small window at the bottom of the image, directly in front of the robot) and let the robot decide: lawn or not lawn? If the robot decides 'not lawn', it is probably looking at an obstacle, and you can trigger a bumper event.

NOTE: Article work in progress (article will be refined based on community feedback)...

Example video:

This article describes:

- how to install Python on an Ubuntu 18.04 computer

- how to train a neural network on that Ubuntu computer with a handful of lawn images (example images for training and testing are included)

- how to install TensorFlow Lite on Raspberry/Banana PI

- how to use the trained network for lawn detection

Install Python and libraries on an Ubuntu computer

This also works on Windows or Mac, but only Ubuntu is described here.

- Install Anaconda or Miniconda on your computer: https://docs.conda.io/projects/conda/en/latest/user-guide/install/linux.html

- Create a new Conda environment:

conda create -n py36 python=3.6

- Activate the new Conda environment:

conda activate py36

- Install the missing Python libraries:

pip install opencv-python==4.5.4.58

pip install numpy==1.19.5

pip install matplotlib==3.3.3

pip install tensorflow==2.5.0

pip install tensorflow-hub==0.11.0

pip install scipy==1.5.2

- Download 'texture_detection.zip' and extract it:

https://drive.google.com/file/d/1LVEnNLcN6vYtEVAUAzoy9Vk0ufSK7we2/view?usp=share_link

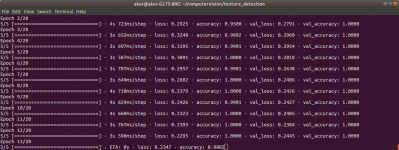
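Before moving on to training, it can help to confirm that the pinned versions listed above were actually installed in the active Conda environment. The following is only a minimal sketch: the function names are made up for this example, and it compares just the first three version numbers because OpenCV's `__version__` string may not carry the extra build number used in the pip package version.

```python
import importlib
import re

# Import name -> version pinned in the pip commands of this article.
PINNED = {
    "cv2": "4.5.4.58",
    "numpy": "1.19.5",
    "matplotlib": "3.3.3",
    "tensorflow": "2.5.0",
    "tensorflow_hub": "0.11.0",
    "scipy": "1.5.2",
}


def installed_version(module_name):
    """Return the module's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except Exception:
        # A broken install can fail with errors other than ImportError.
        return None
    return getattr(module, "__version__", None)


def check_versions(pinned, lookup=installed_version):
    """Return {module: (pinned, installed)} for every module that does not match."""
    mismatches = {}
    for name, wanted in pinned.items():
        found = lookup(name)
        # Compare only major.minor.patch (assumption: build suffixes may differ).
        wanted_parts = re.findall(r"\d+", wanted)[:3]
        found_parts = re.findall(r"\d+", found)[:3] if found else []
        if wanted_parts != found_parts:
            mismatches[name] = (wanted, found)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, found) in check_versions(PINNED).items():
        print(f"{name}: expected {wanted}, found {found}")
```

If the script prints nothing, all six libraries were found with matching version numbers.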

Train neural network with a handful of images on Ubuntu computer

- Go into the folder 'texture_detection' and run 'python train_texture.py'. This trains the neural network on two sets of images ('dataset/train/gras' and 'dataset/train/pavement').

The neural network training should start:

The trained neural network is saved at 'dataset/saved_texture_model/saved_model.pb' (for TensorFlow), together with a converted version, 'model.tflite' (for TensorFlow Lite). The TensorFlow Lite model will be used on the Raspberry.

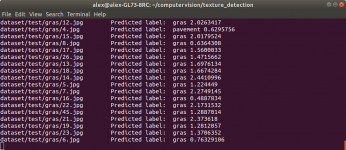
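If you later replace the included example images with your own, a quick check of the folder layout before training can save a failed run. This sketch is not part of 'texture_detection.zip'; it only assumes the 'dataset/train/<class name>' layout mentioned above, and the list of image file extensions is a guess that you may need to adjust.

```python
from pathlib import Path

# Assumption: which file extensions count as images; adjust to your files.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def count_images_per_class(train_dir):
    """Return {class_folder_name: number_of_image_files} for each subfolder."""
    counts = {}
    for class_dir in sorted(Path(train_dir).iterdir()):
        if class_dir.is_dir():
            counts[class_dir.name] = sum(
                1
                for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_SUFFIXES
            )
    return counts


if __name__ == "__main__":
    train_dir = Path("dataset/train")
    if train_dir.is_dir():
        for name, n in count_images_per_class(train_dir).items():
            print(f"{name}: {n} images")
```

Run it from inside the 'texture_detection' folder. A class folder with zero or very few images is worth fixing before starting the training.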

Try out trained neural network with test images

- Run the lawn detection on a couple of test images - it will output the predicted labels:

python test_texture.py

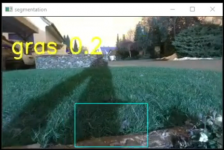

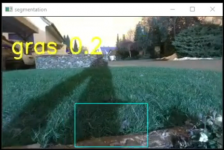

Try out trained neural network with USB camera

- Attach a USB camera to your laptop and try out the trained network on a live video stream:

python detect_lawn.py
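The internals of 'detect_lawn.py' are not shown in this article. To illustrate the idea from the introduction (a small window at the bottom of each frame, in front of the robot), here is a minimal sketch of how such a window could be computed. The function name and the default window size (half the frame width, a quarter of its height) are assumptions for this example, not values taken from the script.

```python
def bottom_window(frame_width, frame_height, width_frac=0.5, height_frac=0.25):
    """Return (x0, y0, x1, y1) of a window centred horizontally at the bottom edge."""
    if not (0 < width_frac <= 1 and 0 < height_frac <= 1):
        raise ValueError("fractions must be in the range (0, 1]")
    win_w = max(1, int(frame_width * width_frac))
    win_h = max(1, int(frame_height * height_frac))
    x0 = (frame_width - win_w) // 2   # centre the window horizontally
    y0 = frame_height - win_h         # align it with the bottom edge
    return x0, y0, x0 + win_w, frame_height


# With OpenCV, frames are NumPy arrays indexed as [row, column]:
#   ok, frame = cv2.VideoCapture(0).read()
#   h, w = frame.shape[:2]
#   x0, y0, x1, y1 = bottom_window(w, h)
#   roi = frame[y0:y1, x0:x1]   # this crop is what gets classified
```

How large the window should be depends on the camera's mounting height and angle, so the fractions are best tuned on the real robot.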

Install TensorFlow Lite on Raspberry/Banana PI

- Update the Python3-Package-Installer (PIP):

pip3 install --upgrade pip

- Install the Python OpenCV library:

pip3 --no-cache-dir install opencv-python

- Install TensorFlow Lite:

python3 -m pip install tflite-runtime

Run lawn detection on Raspberry/Banana PI

- Copy trained neural network model ('model.tflite') to your Raspberry

- Copy 'detect_lawn.py' to your Raspberry

- Edit 'detect_lawn.py' and set 'USE_TF=False' in the code (to use the TensorFlow Lite model)

- Attach USB camera and run lawn detection:

python3 detect_lawn.py
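For readers who want to see roughly what running 'model.tflite' involves, the following sketch classifies one prepared image with the `tflite_runtime` interpreter. It is not the code from 'detect_lawn.py'. The label names and their order are assumptions derived from the training folder names, and the image must already match the model's input shape and data type, which depend on how the model was trained.

```python
# Assumption: class order follows the training folder names; verify for your model.
LABELS = ["gras", "pavement"]


def label_from_scores(scores, labels):
    """Return the label belonging to the highest score."""
    if len(scores) != len(labels):
        raise ValueError("need exactly one score per label")
    best = max(range(len(scores)), key=lambda i: scores[i])
    return labels[best]


def classify_with_tflite(model_path, image, labels=LABELS):
    """Run one image through a TensorFlow Lite model and return the predicted label.

    `image` must be a NumPy array that already has the model's input shape
    (including the batch dimension) and data type.
    """
    # Imported here so label_from_scores also works without the runtime installed.
    from tflite_runtime.interpreter import Interpreter

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    input_info = interpreter.get_input_details()[0]
    output_info = interpreter.get_output_details()[0]

    interpreter.set_tensor(input_info["index"], image)
    interpreter.invoke()
    scores = interpreter.get_tensor(output_info["index"])[0]  # first batch entry
    return label_from_scores(list(scores), labels)
```

In a live loop you would create the interpreter once and reuse it for every frame, since loading the model for each frame is wasteful on a small board.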

Idea/ToDo: Trigger obstacle in robot firmware

If the camera detects a non-lawn area, the Python code could trigger an obstacle:

- Look at the heatmap Python code to see how to connect to the robot firmware as an HTTP client.

- Send the trigger-obstacle command ('AT+O') via the HTTP client to the robot firmware (https://github.com/Ardumower/Sunray...daaa33f1369395b9f8515464/sunray/comm.cpp#L838).
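One way this idea could be sketched is shown here. Everything in it is an assumption apart from the 'AT+O' command itself: the class and function names are hypothetical, the rule of waiting for several consecutive non-lawn frames is an addition meant to avoid reacting to a single misclassified frame, and the HTTP helper simply posts the raw command text. Whether the firmware expects extra framing such as a checksum is not covered here, so check the heatmap code and 'comm.cpp' before relying on it.

```python
import urllib.request


class ObstacleTrigger:
    """Call `send` once after `threshold` consecutive non-lawn frames."""

    def __init__(self, send, threshold=5, command="AT+O"):
        self.send = send              # callable taking the command string
        self.threshold = threshold    # assumed value; tune on the robot
        self.command = command
        self.non_lawn_streak = 0

    def update(self, is_lawn):
        """Feed one classification result; return True if the command was sent."""
        if is_lawn:
            self.non_lawn_streak = 0
            return False
        self.non_lawn_streak += 1
        if self.non_lawn_streak == self.threshold:
            self.send(self.command)
            return True
        return False


def make_http_sender(url, timeout=2.0):
    """Return a function that POSTs a command string to `url` (hypothetical endpoint)."""
    def send(command):
        request = urllib.request.Request(
            url, data=command.encode("ascii"), method="POST"
        )
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.read()
    return send
```

In the detection loop you would create one `ObstacleTrigger(make_http_sender(robot_url))`, where `robot_url` is your robot's address, and call `update(...)` with each frame's result. The command is sent once per streak and only sent again after lawn has been seen in between.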
